Here is a little script I wrote and thought ought to be shared. I use it to upload static files such as images, CSS and JavaScript so that they can be served by Amazon S3 instead of the main application server (for example Google App Engine).

It’s written in Python and does interesting things like compressing and minifying the files that benefit from it. It takes 3 arguments and has 2 options:

```
Usage: s3uploader.py [-xm] src_folder destination_bucket_name prefix
```

- `src_folder`: path to the local folder containing the static files to upload
- `destination_bucket_name`: name of the S3 bucket to upload to (e.g. static.example.com)
- `prefix`: a prefix to use for the destination key (a kind of folder in the destination bucket; I use it to specify a release version to defeat browser caching)
- `-x`: if set, the script sets a far-future expiry on all files; otherwise no expiry header is set and caching is left to the browser's defaults
- `-m`: if set, the script minifies CSS and JavaScript files
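To make the `prefix` argument and the `-x` option more concrete, here is a minimal sketch of how a destination key and the far-future caching header can be built. The helpers `make_key` and `cache_headers` are hypothetical names used only for illustration, and "far future" is assumed to mean the same one year (`3600 * 24 * 365` seconds) the script uses.

```python
import os
import posixpath

ONE_YEAR = 3600 * 24 * 365  # seconds in a (non-leap) year


def make_key(prefix, relative_path):
    """Build the S3 key for a local file (hypothetical helper).

    S3 keys always use '/', so the local path separator is normalized first.
    """
    parts = relative_path.split(os.sep)
    return posixpath.join(prefix, *parts)


def cache_headers(far_future):
    """Return the extra headers the -x option would add (hypothetical helper)."""
    if not far_future:
        return {}
    # max-age is relative to the moment the browser downloads the file.
    return {'Cache-Control': 'max-age=%d' % ONE_YEAR}


print(make_key('v42', os.path.join('css', 'site.css')))  # v42/css/site.css
print(cache_headers(True))  # {'Cache-Control': 'max-age=31536000'}
```

The two options work together: bumping the prefix on each release (say from `v42` to `v43`) produces new URLs, so browsers that cached the old files for a year still fetch the new ones.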

First you will have to install a few dependencies, namely boto, jsmin and cssmin. The installation procedure depends on your OS, but on my Mac I do the following:

```sh
sudo easy_install boto
sudo easy_install jsmin
sudo easy_install cssmin
```

And here is the script itself:

```python
#!/usr/bin/env python
import gzip
import mimetypes
import os
import posixpath
from io import BytesIO
from optparse import OptionParser

import boto
from cssmin import cssmin
from jsmin import jsmin

# Boto picks up its configuration from the environment.
os.environ['AWS_ACCESS_KEY_ID'] = 'Your AWS access key id goes here'
os.environ['AWS_SECRET_ACCESS_KEY'] = 'Your AWS secret access key goes here'

# Depending on the Python version and the system's mime.types file,
# JavaScript files may be reported under any of these content types.
JAVASCRIPT = ['application/javascript', 'application/x-javascript', 'text/javascript']

# The list of content types to gzip, add more if needed
COMPRESSIBLE = ['text/plain', 'text/csv', 'application/xml', 'text/css'] + JAVASCRIPT

ONE_YEAR = 3600 * 24 * 365  # in seconds


def main():
    parser = OptionParser(usage='usage: %prog [options] src_folder destination_bucket_name prefix')
    parser.add_option('-x', '--expires', action='store_true',
                      help='set far future expiry for all files')
    parser.add_option('-m', '--minify', action='store_true',
                      help='minify javascript and css files')
    (options, args) = parser.parse_args()
    if len(args) != 3:
        parser.error('incorrect number of arguments')

    src_folder = os.path.normpath(args[0])
    bucket_name = args[1]
    prefix = args[2]

    conn = boto.connect_s3()
    bucket = conn.get_bucket(bucket_name)

    # Collect the paths of all files, relative to src_folder.
    namelist = []
    for root, dirs, files in os.walk(src_folder):
        dirs[:] = [d for d in dirs if d != '.svn']  # skip Subversion metadata
        path = os.path.relpath(root, src_folder)
        namelist += [os.path.normpath(os.path.join(path, f)) for f in files]

    print('Uploading %d files to bucket %s' % (len(namelist), bucket.name))
    for name in namelist:
        # Read in binary mode so images and other binary files are not altered.
        with open(os.path.join(src_folder, name), 'rb') as f:
            content = f.read()
        # S3 keys always use '/', whatever the local path separator is.
        key = bucket.new_key(posixpath.join(prefix, name.replace(os.sep, '/')))
        content_type, encoding = mimetypes.guess_type(name)
        content_type = content_type or 'application/octet-stream'
        headers = {
            'Content-Type': content_type,
            'x-amz-acl': 'public-read',
        }
        states = [content_type]

        if options.expires:
            # We only use HTTP 1.1 headers because they are relative to the time of
            # download instead of being hardcoded.
            headers['Cache-Control'] = 'max-age=%d' % ONE_YEAR

        if options.minify and content_type in JAVASCRIPT:
            content = jsmin(content.decode('utf-8')).lstrip('\n').encode('utf-8')
            states.append('minified')

        if options.minify and content_type == 'text/css':
            content = cssmin(content.decode('utf-8')).lstrip('\n').encode('utf-8')
            states.append('minified')

        if content_type in COMPRESSIBLE:
            headers['Content-Encoding'] = 'gzip'
            compressed = BytesIO()
            with gzip.GzipFile(fileobj=compressed, mode='wb') as gz:
                gz.write(content)
            content = compressed.getvalue()
            states.append('gzipped')

        print('- %s => %s (%s)' % (name, key.name, ', '.join(states)))
        key.set_contents_from_string(content, headers=headers)


if __name__ == '__main__':
    main()
```

Thanks to Nico for the expiry trick :)
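The gzip step can be understood and tested without touching S3. The stand-alone sketch below uses only the standard library; `compress_if_needed` is a hypothetical name, and the set of compressible types is an assumption modelled on `COMPRESSIBLE` in the script. It gzips the body of compressible types and adds `Content-Encoding: gzip`, which tells the browser to decompress the file transparently.

```python
import gzip
from io import BytesIO

# Content types worth compressing (assumed, modelled on COMPRESSIBLE above).
COMPRESSIBLE = {'text/plain', 'text/csv', 'application/xml',
                'application/javascript', 'text/javascript', 'text/css'}


def compress_if_needed(data, content_type, headers):
    """Gzip `data` (bytes) if its type is compressible; update headers in place."""
    if content_type not in COMPRESSIBLE:
        return data  # images and other binaries are left untouched
    buf = BytesIO()
    with gzip.GzipFile(fileobj=buf, mode='wb') as gz:
        gz.write(data)
    headers['Content-Encoding'] = 'gzip'
    return buf.getvalue()


css = b'body { margin: 0; }\n' * 100
headers = {'Content-Type': 'text/css'}
body = compress_if_needed(css, 'text/css', headers)
print(len(css), '->', len(body), headers['Content-Encoding'])
print(gzip.decompress(body) == css)  # True
```

Since S3 stores and serves the object as-is and does not negotiate encodings, a file uploaded this way is sent gzipped to every client, whatever `Accept-Encoding` header it sends.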

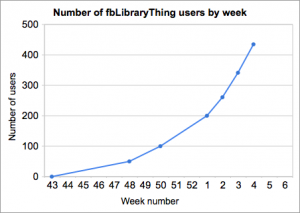

For those of you who were at the Lift conference 2008 you might remember of